The massive recent investments in AI are starting to look like a bubble. Yes, of course, there will be a correction. But when a technology bubble bursts, something useful is normally left over, and it can be an economic powerhouse for decades to come.

In the 1800s, railroads were built all over the world, creating a lasting infrastructure that enabled huge economic growth. People forget that many California railroads were built with narrow-gauge track to support the gold-mining bubble. Narrow gauge was the cheapest way to build a line up a mountain to extract ore. The CAPEX of building the track mattered more than the poor OPEX of driving trains slowly down the mountain. But these railroads were left as obsolete relics when the world of transportation moved on…they used the wrong standard and were located in the wrong places for modern use.

Similarly, in the 1990s, we experienced another huge infrastructure buildout: fiber construction across the United States. The dot-com bubble burst in 2000-01, but the fiber remained in the ground. It forms the backbone of our “information superhighway” today. We’ve upgraded the transceivers multiple times, but much of the glass is the same fiber that was put in the ground 30 years ago.

In both cases, the “edge” of the railroad and fiber networks was discarded after a short time and replaced by something else. But the central backbone remained. The corridor of the first Transcontinental Railroad, completed at Promontory Summit, remains a key trade route today, even though the Promontory segment itself was later bypassed. The fiber bundles between San Francisco and Chicago are heavily used as well.

Consider the AI boom that’s happening now. Everyone knows that it’s a bubble…but the players continue to push forward, each hoping that its piece will be the lasting infrastructure that remains after the bubble bursts.

Think about this: the GPU itself is like a train car, or like a fiber-optic transceiver. It will be useful for a few years and then be replaced by something better. The GPU will not be the lasting infrastructure…but the physical infrastructure will be reused. The data center, the hydro or geothermal power plant, and the fiber connecting it will be the equivalent of railroad tracks or fiber-optic cable…assets that create a lasting economic platform. Again, it’s the centralized infrastructure that will remain, because it’s very hard to predict exactly what will be needed at the edge.

Among today’s AI applications, the most important latency-sensitive and security-sensitive computing is happening on the device:

- We’re running AI models on our phones;

- New cars have huge computing horsepower to optimize their physical horsepower; and

- AI/AR glasses are becoming much more appealing to consumers.

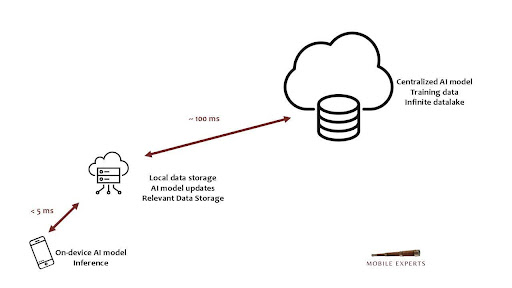

These applications run inference locally on the device, supported by gigantic AI models in centralized data centers thousands of miles away. That leaves very little “edge computing” in the middle. As we lay out in detail in a new report released this week, there is a role for telcos in “Sovereign AI” or “Sovereign Edge Computing” to satisfy the desire for national control over computing workloads. But today’s applications simply don’t require low-latency, neighborhood-level edge computing. We see investment at the national level and in the device, but a desert in between.

Things will change.

One thing that’s likely to change: AI models will need updates from huge pools of training data. Devices won’t be able to store all of the data needed, so the smaller models running on a device will need ever-faster access to a more central data lake and AI model.

That’s when the 5G/6G vision for the mobile network will finally be realized. It’s not happening yet…we can all see that there’s no revenue for low-latency GPU-as-a-service today, so telcos spending $15-20K on an ARC computer for every cell site would be a waste. But as the models improve, the inference engine on the device will need richer inputs. We won’t be willing to make AI models wait 100 ms or more for every data grab…and that will create a need for something at the edge of the network.
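A rough back-of-envelope calculation shows why distance matters for that wait. Assume, purely for illustration, a central data center about 4,000 km (roughly 2,500 miles) from the device, an edge site about 20 km away, and a signal speed in glass fiber of about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum). Propagation delay alone for one round trip is then approximately:

$$
t_{\text{RTT,central}} \approx \frac{2d}{v_{\text{fiber}}} = \frac{2 \times 4{,}000\ \text{km}}{200{,}000\ \text{km/s}} = 0.04\ \text{s} = 40\ \text{ms}
$$

$$
t_{\text{RTT,edge}} \approx \frac{2 \times 20\ \text{km}}{200{,}000\ \text{km/s}} = 0.0002\ \text{s} = 0.2\ \text{ms}
$$

Real fiber routes are longer than straight lines, and routing, queuing, radio access, and server processing all add time, so practical round trips to a distant data center are longer still. The distances above are illustrative assumptions, but they show why repeated data grabs from far away add up quickly.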

Will this take 5 years? 10 years? I don’t know yet.

What I can say is that wireless follows a pattern. First, a business develops hundreds of billions in revenue over wires, and then we take the wires away. It happened for voice, then for email, then for Internet access, and finally for video. We can now do anything on a wireless device that was once done over wires.

Why would we think that AI would develop in a different way? It seems clear that the AI business model and ecosystem will develop around huge, trillion-dollar centralized models, loosely coupled with smaller models on handheld devices. When that market gets big enough, applications will start to require something between the central AI model and the handset…some resource in the dead zone between the two extremes.

I believe that the Nvidia/Nokia partnership could be very important in 2035. I don’t think it’s wise for Nokia to bet its entire mobile division on the ARC platform in the near term. But the partnership could lead to the right strategic product in the long term.