Cybersecurity is no longer a contest between human adversaries — it is rapidly becoming a race between AI agents operating at machine speed

In 2003, the Blaster worm spread across corporate networks and government systems worldwide. Its payload, which forced repeated reboots and launched a denial-of-service attack against Microsoft’s update servers, was crude by today’s standards, yet its impact was profoundly disruptive. IT teams spent weeks identifying the flaw, testing patches, and deploying them machine by machine. Despite the chaos, defenders had the upper hand. The worm was predictable, the remediation was clear, and, most importantly, the battle unfolded at human speed.

That era is over. The rise of artificial intelligence has created a fault line in cybersecurity. This rupture is so profound that the assumptions underpinning decades of digital defense no longer hold. Cybersecurity is no longer a contest between human adversaries. It is rapidly becoming a race between autonomous agents operating at machine speed.

A brief history of escalation

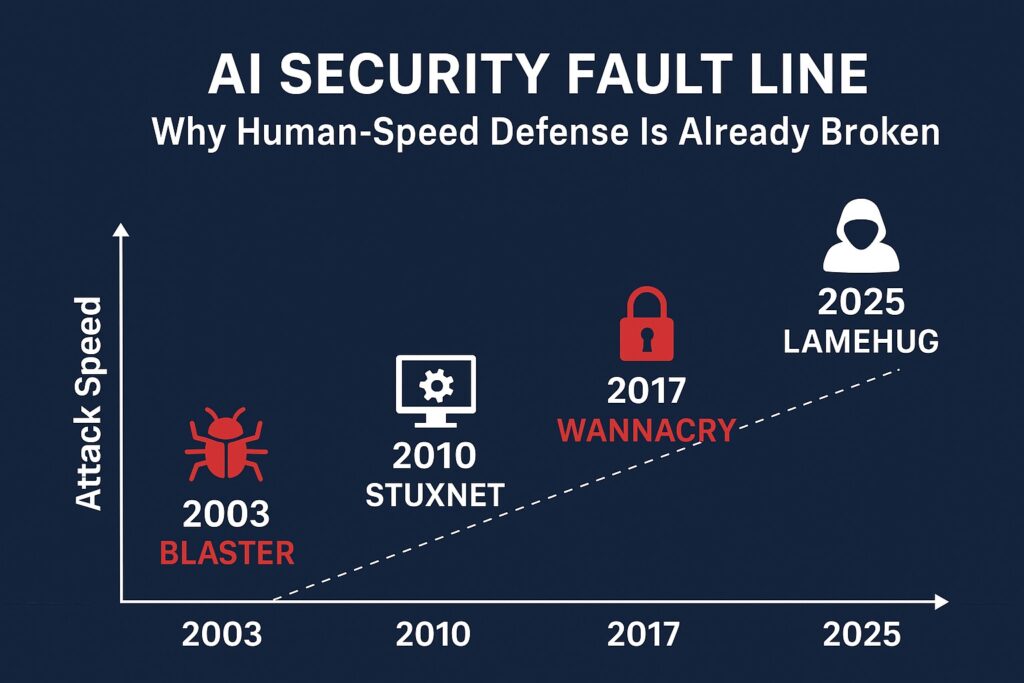

The journey to this fault line is marked by four distinct eras of attack, each one compressing the defender’s response window.

- 2003: Blaster (human-paced chaos). This attack was a global inconvenience, but its simplicity was its weakness. Once its signature was identified, a coordinated human effort of patching and antivirus updates brought the crisis under control. It proved defense could win when time was measured in weeks.

- 2010: Stuxnet (the rise of precision). Seven years later, Stuxnet changed the game. Designed for surgical sabotage against Iranian centrifuges, it blended zero-days and stealthy payloads. Yet even Stuxnet relied on human operators to design and deploy its mission. The tempo was faster but still bounded by a human cadence.

- 2017: WannaCry (automation at scale). Combining ransomware with worm-like propagation, WannaCry crippled global institutions in days. It repurposed a leaked nation-state exploit, demonstrating the “elite for everyone” trend. It was the first glimpse of how automation could outpace human response on a global scale.

- 2025: LameHug (autonomy arrives). Today, malware like LameHug, attributed to APT28, uses Large Language Models (LLMs) to autonomously generate commands inside compromised networks. It adapts in real time, escalating privileges and evading defenses without direct human input. For the first time, defenders are not facing an attacker with tools. They are facing the tool itself, operating as an independent agent.

This history tells a story of radical acceleration. Each generation of attack shrank the defender’s response window, from weeks to days, until AI all but eliminated it.

The collapse of old assumptions

Three pillars of traditional cybersecurity have quietly crumbled:

- Asymmetry of attack. The attacker needed only one opening while the defender had to guard them all. Historically, this was balanced by attacker effort. AI annihilates this balance. It enables mass scanning and autonomous exploitation that make every potential flaw a critical, immediate threat.

- The power of patterns. Antivirus and Security Information and Event Management (SIEM) tools assumed repetition. But generative AI enables polymorphism where every intrusion is unique and every payload is mutable. When there is no consistent signature, pattern-matching fails.

- The human cadence. Security Operations Centers (SOCs) rely on analysts to validate, escalate, and decide. But humans operate in minutes or hours, while an AI-driven adversary adapts in seconds. By the time a human OODA (Observe, Orient, Decide, Act) loop completes, the attacker has already achieved its objective.
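
The failure of exact pattern-matching can be illustrated with a minimal sketch. It assumes a hypothetical signature database of SHA-256 digests (`KNOWN_BAD`) and a placeholder payload; real antivirus and SIEM products use far richer signatures, so this only shows the principle that a one-byte mutation defeats an exact match.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of known-bad payloads.
# The payload bytes are placeholders, not real malware.
KNOWN_BAD = {hashlib.sha256(b"malicious-payload-v1").hexdigest()}


def matches_signature(payload: bytes) -> bool:
    """Return True if the payload's digest appears in the signature set."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD


original = b"malicious-payload-v1"
# A trivially mutated variant: same intent, one appended byte.
variant = original + b"\x00"

print(matches_signature(original))  # True
print(matches_signature(variant))   # False
```

If every generated payload differs by even a single byte, each one needs its own signature, which is the situation the polymorphism argument describes.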

The new adversary

Three forces are defining this new era of threat:

- Elite capability for everyone. DARPA’s AI Cyber Challenge produced tools that autonomously discovered dozens of vulnerabilities in hours. These tools are now open-sourced. Meanwhile, XBOW, an autonomous AI bug hunter, topped HackerOne’s US bug bounty leaderboard. What was once a nation-state monopoly is now democratized.

- Machine-speed vulnerabilities. In a controlled study, developers using GitHub Copilot completed a coding task roughly 55% faster. But a separate security assessment found that about 40% of the programs Copilot generated in security-relevant scenarios contained vulnerabilities. Enterprises are building security debt at machine speed while testing remains human-bound.

- Autonomous intrusions. LameHug proves that AI can now act as the operator, not just an assistant. Adaptive campaigns are no longer theoretical. They are in the wild. The adversary no longer needs to pause and retool because the system retools itself.

Countermeasures in the AI era

While the threat has evolved, so has the defense. A new generation of AI-native security is emerging, but its effectiveness is not guaranteed and depends on solving critical underlying challenges.

1. AI-powered threat detection and analytics

Next-generation EDR, XDR, and SIEM platforms use machine learning to analyze trillions of data points and identify anomalous behaviors that would be invisible to human analysts.

- Likelihood of effectiveness: Moderate. These systems are highly effective at spotting deviations from a known baseline, making them powerful against less sophisticated attacks. However, they are fundamentally analytical and reactive. They excel at finding needles in a haystack but can be fooled by generative adversaries that can create entirely new types of needles on the fly or mimic “normal” behavior with near-perfect accuracy.

- Key challenge: The data gap. Defensive AI is trained on historical attack data. We are effectively training our systems to fight the last war. There is a critical lack of real-world data on how autonomous AI adversaries behave. This limits our ability to model and defend against them proactively.
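
The strength and the blind spot of baseline-driven detection can be shown with a minimal sketch. It assumes a simple z-score test over a hypothetical hourly upload baseline; the function name, threshold, and data are illustrative and do not describe any particular EDR, XDR, or SIEM product.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag a value that lies more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical baseline: megabytes uploaded per hour by one workstation.
baseline_mb = [48.0, 52.0, 50.0, 47.0, 53.0, 49.0, 51.0, 50.0]

print(is_anomalous(baseline_mb, 400.0))  # True: a noisy bulk exfiltration stands out
print(is_anomalous(baseline_mb, 54.0))   # False: a slow leak that mimics normal traffic
```

The second call is the case described above: an adversary that paces itself to stay inside the learned baseline is, by construction, invisible to this kind of check.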

2. Autonomous response and remediation

Technologies like SOAR (Security Orchestration, Automation, and Response) are being enhanced with AI to not just detect threats but to act on them instantly. This includes actions like quarantining an endpoint, blocking an IP address, or even deploying a patch without human intervention.

- Likelihood of effectiveness: Potentially high, currently low. In theory, this is the perfect counter to machine-speed attacks. The DARPA challenge proved that AI can both find and fix vulnerabilities autonomously. In practice, the risk of a false positive causing catastrophic business disruption, like automatically shutting down a production server, is immense.

- Key challenge: The trust chasm. This is a governance and leadership problem, not a technical one. Can an organization’s leadership “surrender control” and trust a machine to make a high-stakes decision in milliseconds? Building the confidence, guardrails, and accountability frameworks to allow for true autonomous response is the single largest barrier to adoption.
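
One way to picture the guardrails this calls for is a policy gate that lets the machine act alone only where a false positive is cheap. The sketch below is an assumption-laden illustration: the `Alert` fields, the `decide` function, and both thresholds are hypothetical and are not taken from any SOAR product.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    QUARANTINE = "quarantine"
    ESCALATE = "escalate_to_human"
    MONITOR = "monitor"


@dataclass(frozen=True)
class Alert:
    host: str
    confidence: float        # detector confidence between 0 and 1
    business_critical: bool  # True for assets whose outage would disrupt the business


def decide(alert: Alert, auto_threshold: float = 0.95, review_threshold: float = 0.6) -> Action:
    """Act autonomously only on high-confidence alerts for non-critical assets."""
    if alert.confidence < review_threshold:
        return Action.MONITOR
    if alert.business_critical or alert.confidence < auto_threshold:
        return Action.ESCALATE
    return Action.QUARANTINE


print(decide(Alert("laptop-17", 0.98, False)))  # Action.QUARANTINE
print(decide(Alert("prod-db-1", 0.98, True)))   # Action.ESCALATE
```

Where to set the thresholds, and which assets count as critical, are exactly the governance decisions leadership has to own. The code only makes those choices explicit and auditable.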

3. AI-driven deception technology

Modern honeypots and decoys use AI to create dynamic, believable fake environments. These systems are designed to lure in automated attackers. This allows defenders to study their methods in a safe environment and feed that intelligence back into the defensive stack.

- Likelihood of effectiveness: High potential. This is one of the most promising frontiers. Instead of just blocking an attack, deception technology can actively waste an adversary’s resources and turn their own automation against them. It is a proactive, intelligence-gathering defense.

- Key challenge: The scalability trap. Building and maintaining a convincing deception environment that scales across a complex enterprise network is extraordinarily difficult. It requires continuous investment and deep expertise to ensure the decoys are not just present but are indistinguishable from real assets to an intelligent attacking AI.
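
The simplest form of deception is a decoy credential that no legitimate user or process should ever touch, so any use of it is a high-fidelity signal. The sketch below assumes hypothetical decoy account names and a hypothetical `triage_login` helper. It shows only the alerting logic, not the much harder work of making decoys believable at enterprise scale.

```python
# Hypothetical decoy accounts planted in directories and config files.
DECOY_ACCOUNTS = frozenset({"svc-backup-legacy", "admin-archive"})


def triage_login(username: str, source_ip: str) -> dict:
    """Raise a high-severity alert whenever a decoy credential is used."""
    if username in DECOY_ACCOUNTS:
        return {
            "alert": True,
            "severity": "high",
            "reason": "decoy credential used",
            "source_ip": source_ip,
        }
    return {"alert": False}


print(triage_login("svc-backup-legacy", "203.0.113.7")["severity"])  # high
print(triage_login("alice", "198.51.100.4"))                         # {'alert': False}
```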

A new doctrine

There is no single patch for this paradigm shift. The only viable path forward requires a new doctrine: Fortify, Automate Intelligently, and Embrace Autonomous Defense.

- Fortify. Ruthlessly prioritize what matters. Critical systems must be identified and hardened, with non-essential assets isolated or decommissioned. Boards must accept that resilience, not just feature velocity, is the primary metric of success.

- Automate intelligently. Cede routine detection and containment to machine-speed defenses. Human experts must be elevated from front-line triage operators to strategic roles like threat hunters, system architects, and AI trainers.

- Embrace autonomous defense. The hardest leap is psychological. Leaders must begin building the trust and governance models that allow defenses to act faster than humans can supervise. This is not about blind faith. It is about building accountable systems you can depend on when a decision must be made in milliseconds.

The leadership test

The AI fault line is not a forecast. It is already here, confirmed by the open-sourcing of DARPA’s tools, the vulnerabilities in AI-generated code, and LameHug operating in the wild.

The real test is whether leaders can abandon legacy assumptions and embrace uncomfortable truths. Cybersecurity can no longer be treated as a compliance checkbox or a cost center. It must be seen as a core pillar of business resilience, requiring new investments, new doctrines, and the courage to trust machines. Those who fail this test will not fail gradually. They will fail suddenly.

Sources:

- DARPA. (2025). DARPA AIxCC Results: AI Cyber Reasoning Tools Released Open Source. https://www.darpa.mil/news/2025/aixcc-results

- Dohmke, T. (2022). Research: GitHub Copilot Boosts Developer Productivity by 55.8% in Controlled Study. GitHub Blog. https://github.blog/2022-07-14-research-github-copilot-improves-developer-productivity

- Pearce, H., et al. (2021). Asleep at the Keyboard? Assessing the Security of GitHub Copilot’s Code Contributions. arXiv. https://arxiv.org/abs/2108.09293

- Liles, J. (2025). APT28’s LAMEHUG Malware Uses LLMs to Generate Commands. BleepingComputer. https://www.bleepingcomputer.com/news/security/lamehug-malware-uses-ai-llm-to-craft-windows-data-theft-commands-in-real-time

- Pozniak, H. (2025). XBOW AI Tops HackerOne’s US Bug Bounty Leaderboard. TechRepublic. https://www.techrepublic.com/article/news-ai-xbow-tops-hackerone-us-leaderboad