How will mobile operators use AI/ML to boost capacity and energy efficiency in the radio network? The PR wars have started, and people will stir up a lot of questions. CPU? GPU? What about the new ARC Compact? Does the RAN have to be virtualized? Is Open RAN going to be compatible with AI-for-RAN? Is all of this just a re-hash of edge computing, which failed to launch ten years ago?

Yes, the PR wars will rage on along these lines for several years, but (spoiler alert!) I can give you the answers now, because the economics already make them pretty clear. Mobile Experts published its comprehensive analysis a few days ago.

First of all, we investigated the technical aspects of AI-for-RAN. We segmented the benefits into two groups:

- Real-time capacity improvements in channel state estimation, link adaptation, interference cancellation, scheduler tweaks, and traffic grouping/steering.

- Non-realtime capacity improvements in traffic steering, RET adjustments, beam shaping, and beam coordination.

We see this real-time vs. non-realtime segmentation as the most important way to think about each AI algorithm, because the implementation is quite different in a real-world network. Real-time adjustments can be done in a lightweight CPU at each site, or with a slimmed-down GPU product like ARC Compact in a localized approach, such as one ARC Compact server for every five or ten sites. Non-realtime tweaks, by contrast, can run on a big centralized rack of compute. We looked closely at the model sizes for each of these AI algorithms to determine whether each tweak needs to be local or can be controlled remotely.
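As a rough illustration of that segmentation, the sketch below maps each algorithm named in the two groups above to the deployment option described for its timing class. The enum, the dictionaries, and the `placement_options` helper are hypothetical names introduced for illustration only; the actual analysis weighs model size, which this sketch does not attempt to capture.

```python
from enum import Enum


class Timing(Enum):
    REAL_TIME = "real-time"
    NON_REAL_TIME = "non-realtime"


# Groupings as listed in the two bullets above. "traffic grouping/steering"
# is split into two entries here (an illustrative choice), so traffic
# steering shows up in both timing classes.
ALGORITHMS = {
    Timing.REAL_TIME: [
        "channel state estimation",
        "link adaptation",
        "interference cancellation",
        "scheduler tweaks",
        "traffic grouping",
        "traffic steering",
    ],
    Timing.NON_REAL_TIME: [
        "traffic steering",
        "RET adjustments",
        "beam shaping",
        "beam coordination",
    ],
}

# Deployment options as described in the paragraph above.
PLACEMENT = {
    Timing.REAL_TIME: "lightweight site CPU, or localized GPU server shared by 5-10 sites",
    Timing.NON_REAL_TIME: "centralized compute rack",
}


def placement_options(algorithm: str) -> list[str]:
    """Return one placement per timing class that lists the algorithm."""
    return [
        PLACEMENT[timing]
        for timing, names in ALGORITHMS.items()
        if algorithm in names
    ]


if __name__ == "__main__":
    for name in ("link adaptation", "beam shaping", "traffic steering"):
        print(name, "->", placement_options(name))
```

An algorithm that appears in both groups, such as traffic steering, returns both options, which mirrors the point that the same function can have a real-time and a non-realtime variant.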

Secondly, we looked at the economics of AI-for-RAN. Here, the most important impact for the CFO comes from boosting capacity in the RAN. Yes, there are energy savings and some latency or jitter reductions. But nothing compares to a 30% boost in uplink capacity. The CFO will kiss your feet if you’re the engineer who tells him that he doesn’t have to spend $20B on spectrum and radios.

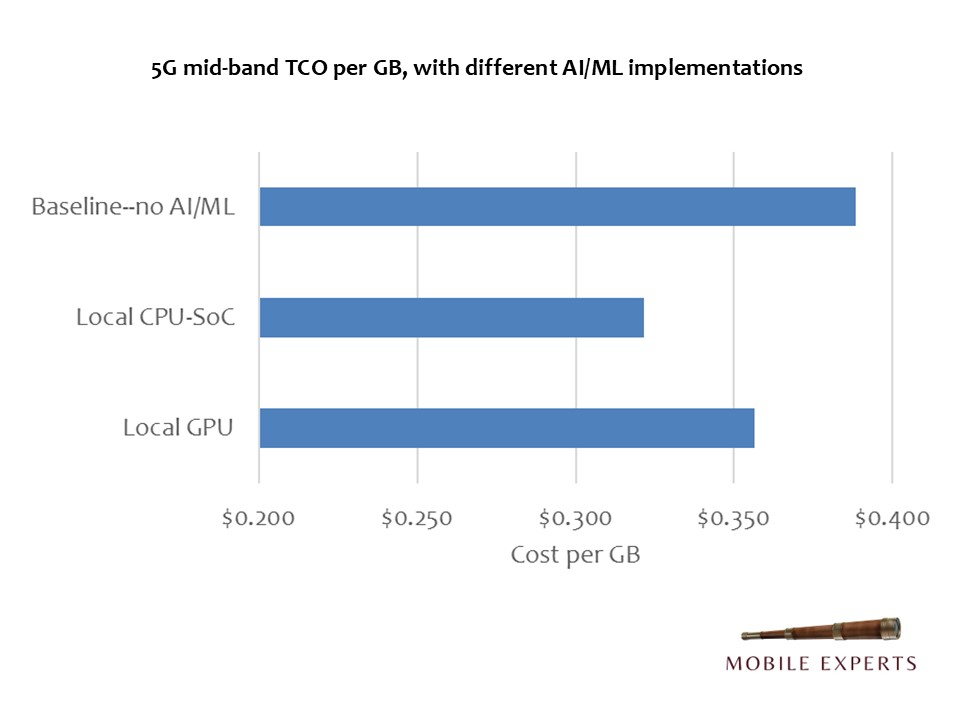

Our TCO calculations show that a conservative 20% boost in capacity can drop the cost of each GB of data from $0.38 to $0.32, using tools that come practically free from the network vendors. Another option is to deploy ARC Compact in every neighborhood…not a bad option if real estate and fiber allow it.
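The arithmetic behind that drop can be sanity-checked with a back-of-the-envelope sketch. It assumes (a simplification, not the full TCO model) that total network cost stays flat while deliverable traffic grows in proportion to capacity, so cost per GB scales as 1 / (1 + boost). The function name is hypothetical.

```python
def cost_per_gb_after_boost(baseline_cost: float, capacity_boost: float) -> float:
    """Cost per GB after a fractional capacity boost.

    Assumption: total cost is unchanged and the extra capacity is fully
    used, so cost per GB is divided by (1 + capacity_boost).
    """
    if capacity_boost <= -1.0:
        raise ValueError("capacity_boost must be greater than -1.0")
    return baseline_cost / (1.0 + capacity_boost)


baseline = 0.38  # dollars per GB, from the text
boosted = cost_per_gb_after_boost(baseline, 0.20)  # conservative 20% boost
print(f"${boosted:.2f} per GB")  # prints "$0.32 per GB"
```

Under this flat-cost assumption, a 20% boost lands at roughly $0.32 per GB, which is consistent with the figure quoted above for tools that come practically free.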

Third, we have taken a close look at the demand for mobile capacity, and we now have some hard data to firm up our forecasts of uplink traffic in the mobile network. Multiple use cases drive uplink traffic: video chat, IoT, FWA, AI smartphone apps, and AR/XR. We have been able to quantify these much more precisely over the past six months, and you can find the details in our separate, focused report on Smartphone AI Applications.

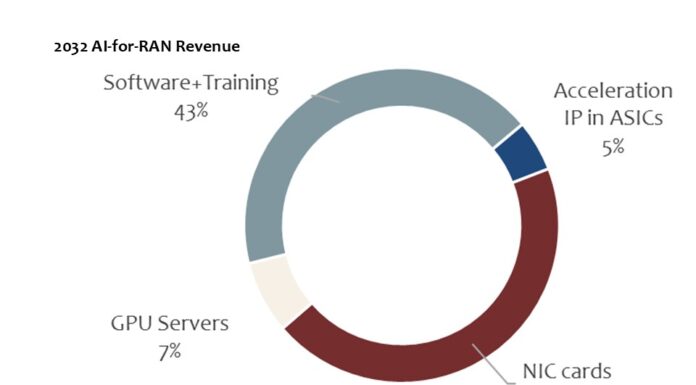

Finally, we built a forecast for CPU and GPU sales in the AI-for-RAN market over the next seven years. (Why seven years instead of our usual five? Because we wanted to show how it will change with vRAN deployments after 6G spectrum is released in 2029-2030.) In fact, the full forecast covers the whole range of options, from IP blocks for AI/ML embedded in a network vendor’s ASIC to NICs in a vRAN environment and GPU-based servers. It also looks at the rise of software revenue, from AI/ML algorithms embedded in the RAN software to rApps, software, and training in the GPU environment.

Overall, we see two competing philosophies. Some operators will work on real-time AI/ML improvements first, working closely with their vendors. Other operators are more focused on offering “GPU-as-a-service” and will start with a big rack of Grace Blackwell servers, or possibly a slimmed-down rack with ARC Compact, in hopes of both achieving RAN improvements and creating new revenue. In our ROI calculation, we looked at both of these cases.

In the end, I believe that the real-time improvements from cheap and simple CPU implementations will yield big benefits for the network and will become nearly universal. Additional improvements from non-realtime algorithms will come along in time, as each operator comes up with a plan for centralized GPU deployment.

We believe that ten years from now, the roadmaps for Edge AI and AI-for-RAN will converge, making investments in Blackwell or ARC Compact a good idea. Until then, operators can get the performance they need from some light CPUs at the site.