Omniverse digital twins bring AI into the real world using new Nvidia hardware and software

Nvidia Corp. founder, President and CEO Jensen Huang used the keynote address at the company’s GTC developer conference to frame Nvidia’s Omniverse as the software backbone for the next generation of innovation in artificial intelligence (AI) and robotics. Nvidia is hosting its annual GTC conference virtually this year.

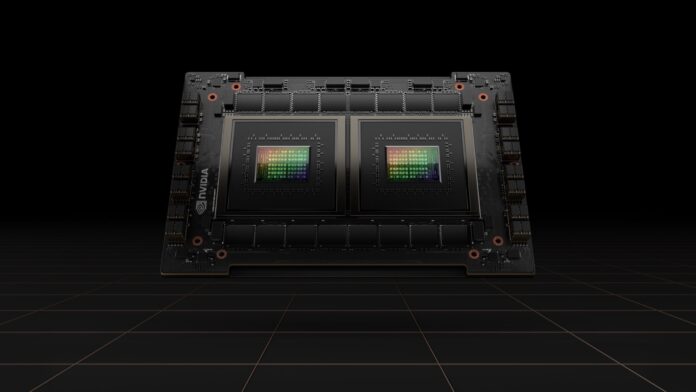

Nvidia made numerous hardware and platform announcements supporting those claims, starting with the new Hopper GPU architecture, the successor to its popular Ampere GPU architecture, which powers Nvidia’s A100 series AI data center infrastructure. Nvidia noted that the architecture is named for Grace Hopper, the U.S. computer scientist whose pioneering work on machine-independent programming languages led to the creation of the COBOL computer language.

The new H100 chip is the foundation of Nvidia’s DGX H100 AI infrastructure systems, each designed to provide 32 petaflops of AI performance at the new FP8 precision, 6x more than the prior generation, according to Nvidia. The modular DGX H100 systems can be assembled into “SuperPODs” comprising up to 32 nodes, totaling 256 H100 GPUs working together.
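For readers who want to check the math, the SuperPOD figures quoted above can be worked through in a few lines of Python. This is a back-of-the-envelope sketch, not an Nvidia specification: the function names are made up for illustration, and the aggregate number assumes perfect linear scaling across nodes, which real clusters do not achieve.

```python
# Figures quoted in the article (Nvidia's claims, not independent measurements).
SUPERPOD_NODES = 32        # DGX H100 systems in a full SuperPOD
SUPERPOD_GPUS = 256        # H100 GPUs in a full SuperPOD
NODE_FP8_PETAFLOPS = 32    # claimed FP8 AI performance of one DGX H100


def gpus_per_node(total_gpus: int, nodes: int) -> int:
    """Return how many GPUs each node holds, assuming an even split."""
    if nodes <= 0 or total_gpus % nodes != 0:
        raise ValueError("GPUs must divide evenly across a positive node count")
    return total_gpus // nodes


def ideal_aggregate_petaflops(nodes: int, per_node_petaflops: float) -> float:
    """Upper bound on aggregate performance, ASSUMING perfect linear scaling."""
    return nodes * per_node_petaflops


if __name__ == "__main__":
    print(gpus_per_node(SUPERPOD_GPUS, SUPERPOD_NODES))                   # 8
    print(ideal_aggregate_petaflops(SUPERPOD_NODES, NODE_FP8_PETAFLOPS))  # 1024
```

In other words, the quoted totals imply eight GPUs per DGX H100, and a full 32-node SuperPOD would top out at roughly 1,024 petaflops of FP8 performance under that idealized assumption.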

Nvidia is using the DGX H100 to power its own “Eos” supercomputer, which it claims will be the world’s fastest AI system when it goes online this year, faster even than Fugaku, the current reigning champ.

“For traditional scientific computing, Eos is expected to provide 275 petaflops of performance,” said Nvidia.

The company also introduced its first discrete data center CPU for AI and high-performance computing today, based on Arm’s Neoverse architecture. This is the first Arm-related announcement Nvidia has made since it called off plans in February to acquire the chip designer from owner SoftBank in a transaction valued at $40 billion.

Nvidia’s Grace “superchip” comprises two CPUs linked by Nvidia’s NVLink-C2C high-speed, low-latency interconnect. The chip delivers better performance than Nvidia’s DGX A100 with industry-leading efficiency, the company said. Nvidia predicts orders of magnitude better performance in large-scale AI and high-performance computing (HPC) using the new processors. Nvidia is offering the new interconnect to its manufacturing partners for custom silicon integration.

Digital twins help AI drive real-world outcomes – literally

The Omniverse emerged from beta for the public after an enterprise launch last year. Huang introduced the Omniverse originally by borrowing language from Snow Crash, a seminal work of cyberpunk science fiction by Neal Stephenson. But the Omniverse as it exists today isn’t science fiction. Huang said it’s solving real-world problems.

Huang explained that NASA coined the term “digital twin” as a way to describe mathematical simulations of real-world objects and situations. The Omniverse is the realization of that idea. Practical uses for it abound. It can serve as a shared collaborative space for people working on 3D models and environments for gaming and entertainment, for example, or as a way to work out 5G antenna placement for optimal coverage, as in the case of Ericsson.

But Huang also presented the Omniverse as a digital twin proving ground for testing the artificial intelligence that powers autonomous systems in the real world. By simulating AI behavior and improving its results, the Omniverse can help AI systems navigate the real world better, said Huang.

The Omniverse is central to Nvidia’s DRIVE platform, a full-stack autonomous vehicle platform the company has developed that combines optical sensors, AI, custom silicon, and more. Nvidia announced that DRIVE Orin, its system-on-a-chip (SoC) designed for EV manufacturers building software-defined vehicles, has entered production this month.