AI’s future depends less on algorithms and more on the physical realities of silicon, power, capital, and geopolitics

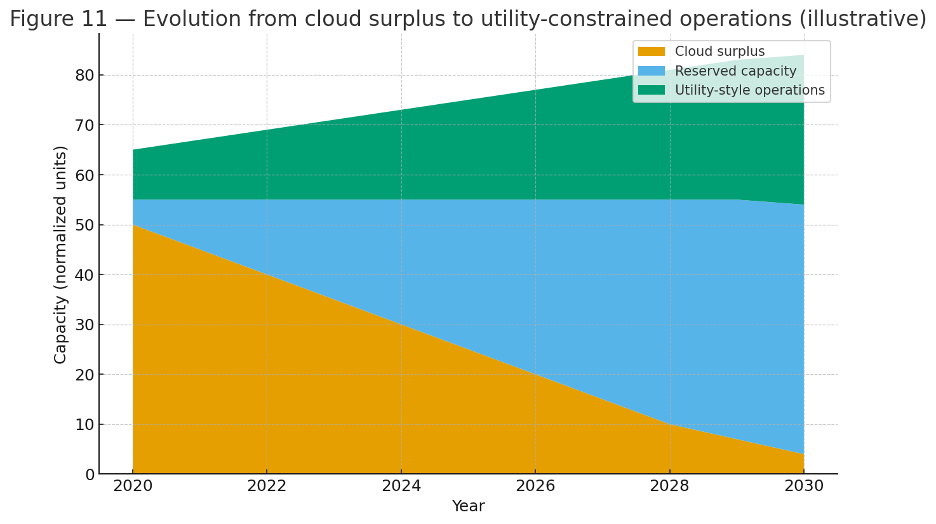

The artificial intelligence sector has entered a new industrial phase. For two decades, the cloud business model created the illusion that computing capacity was an elastic, on-demand resource. That model worked because capital was cheap, semiconductors were abundant, and energy was inexpensive. Today, each of those foundations is under stress.

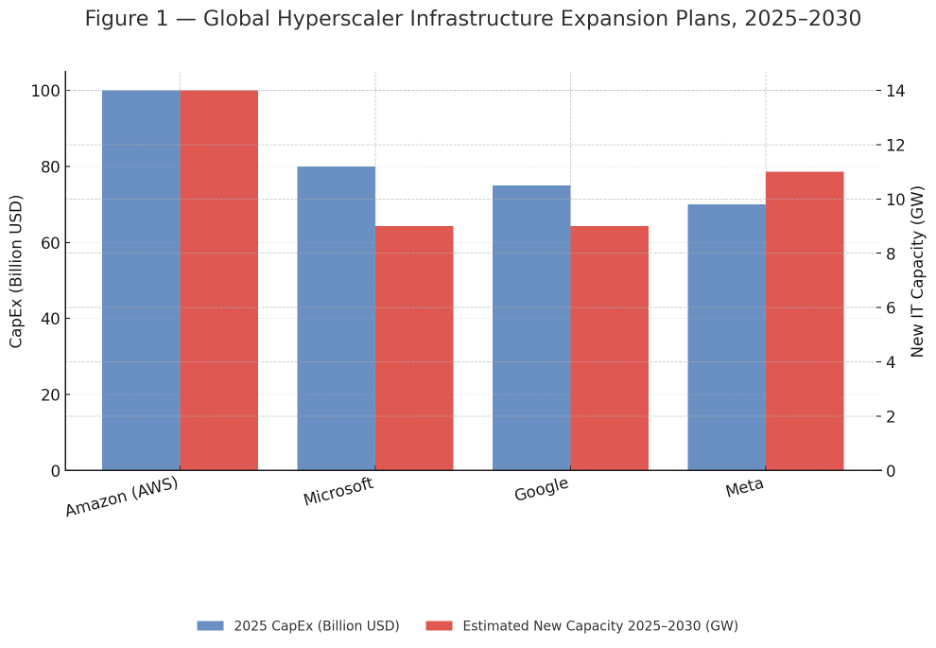

The October 2025 announcement of a strategic collaboration between OpenAI and Broadcom to co-develop up to 10 gigawatts of custom AI accelerators symbolizes this shift. The number itself is a projection, not a verified deployment, but it captures the industrial scale of ambition that now defines the field. What was once an exercise in software optimization is becoming an exercise in industrial logistics.

The real bottleneck: Semiconductors, not energy

Public debate often focuses on AI’s energy appetite. While data centers consume growing amounts of power, the more fundamental constraint lies in semiconductor supply.

Energy: Expanding, but unevenly usable

Global renewable generation capacity continues to expand rapidly. The International Renewable Energy Agency reported that the world added 585 gigawatts of renewable power capacity in 2024, a year-on-year growth rate of about 15%. The International Energy Agency projects that global renewable capacity could almost double by 2030, with more than 4,600 gigawatts of additions.

Yet capacity growth is not the same as reliable generation. Variability, transmission bottlenecks, curtailment, and the limited deployment of grid-scale storage mean that dispatchable power remains a limiting factor. For large AI data centers that must operate continuously, the constraint is often the quality and availability of power, not the headline quantity of installed capacity.

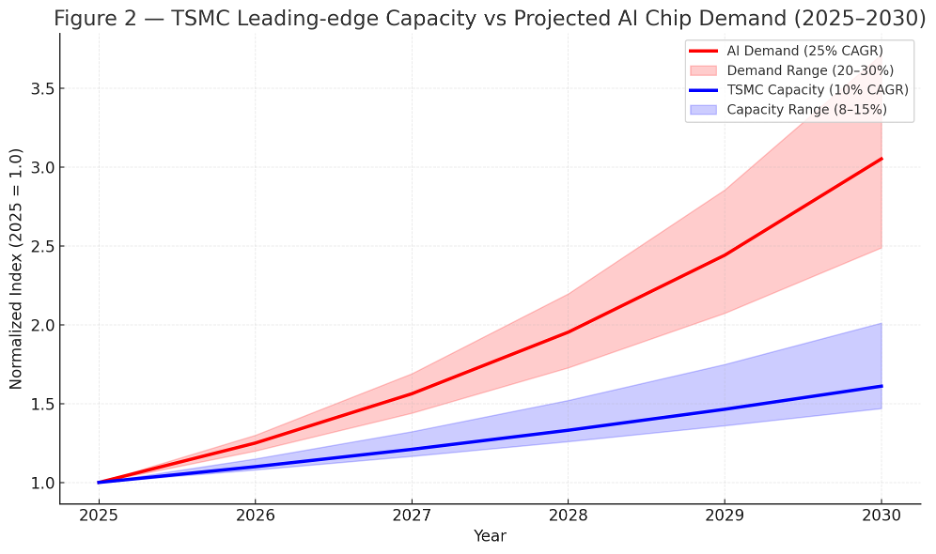

Silicon: The finite substrate of AI

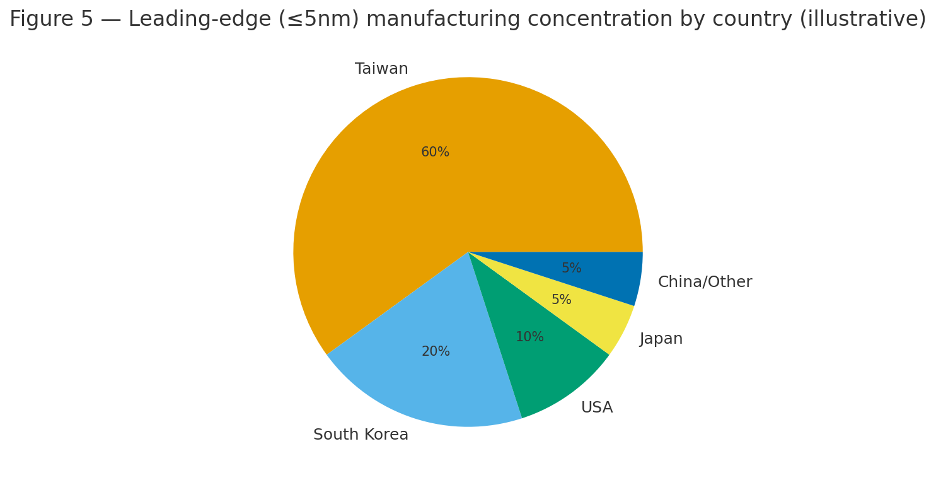

At the heart of AI computing lies a semiconductor supply chain with only a few viable actors. TSMC, Samsung, and Intel operate the world’s most advanced fabrication facilities, and TSMC continues to dominate external foundry production at the five-nanometer class and below. Building new capacity requires multi-year investments and access to extreme ultraviolet lithography systems that are available only from a single supplier, ASML.

Every wafer allocated to AI comes at the expense of another industry. This inelasticity makes semiconductor throughput the true bottleneck in scaling AI. Even if electricity were free and capital abundant, the absence of advanced wafer capacity would still cap global compute growth.

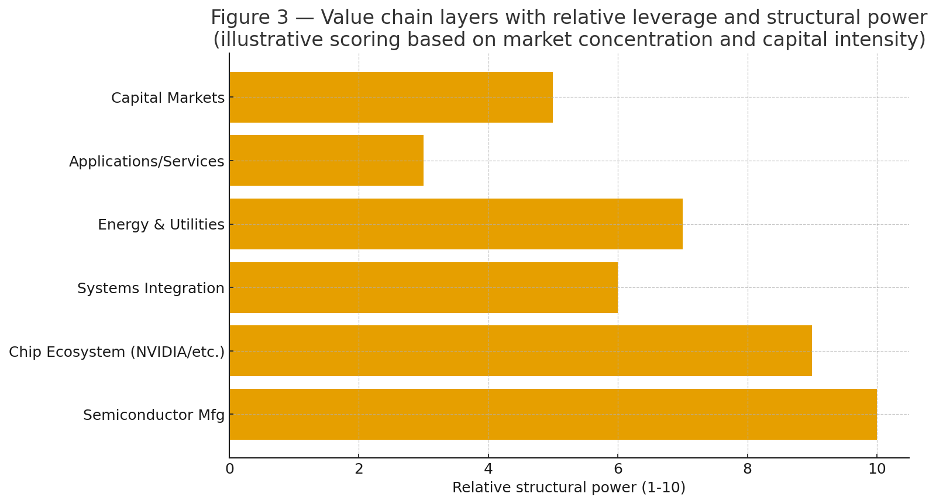

Value chain power and concentration

The AI value chain is highly asymmetric.

- Semiconductor design and fabrication hold the highest leverage because capacity is scarce and expansion is slow.

- System integration and software ecosystems, such as NVIDIA’s CUDA, remain strong but are facing new competition from Google’s TPUs, AMD’s ROCm, and emerging ASIC designs.

- Infrastructure operators, including the hyperscalers, manage siting and scaling but remain dependent on upstream suppliers.

- Energy providers exercise leverage where grids are tight or politically constrained.

- Applications and services are highly competitive and capture a smaller share of total value.

Economic power has migrated downward in the stack, toward physical manufacturing and energy availability rather than purely digital assets.

Modeling the scale of AI infrastructure

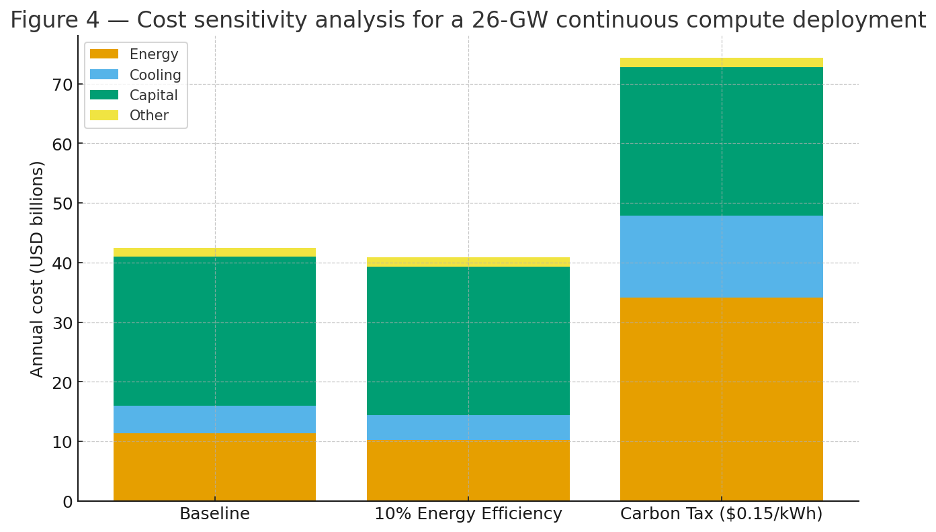

To illustrate the economics of hyperscale AI infrastructure, consider a notional deployment operating at 26 gigawatts of continuous load. This is a scenario model, not a forecast, but it demonstrates the scale of the industrial shift.

- A continuous load of 26,000 megawatts, running all 8,760 hours of the year, consumes roughly 228 terawatt-hours annually.

- At an average cost of five cents per kilowatt-hour, the annual energy expense would exceed 11 billion dollars.

- Adding cooling and operational overhead raises that to approximately 14 to 17 billion dollars.

- Hardware depreciation, maintenance, and amortized capital could add 20 to 30 billion dollars annually.

- Summing power and hardware costs, the total annual operating cost of such a system could therefore reach roughly 35 to 50 billion dollars.

A 10% improvement in energy efficiency at this scale saves roughly one to two billion dollars annually, because it trims only the 11 to 17 billion dollar power bill and leaves hardware and capital costs untouched. Efficiency gains matter, but they do not fundamentally alter the economics of large-scale compute.
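The arithmetic behind this scenario can be reproduced in a few lines. The sketch below is illustrative only: the load, energy price, and hardware cost range come from the figures above, while the overhead multipliers of 1.25 and 1.50 are assumptions chosen because they reproduce the 14 to 17 billion dollar range quoted for power plus cooling.

```python
# Scenario arithmetic for the notional 26 GW deployment described above.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored

LOAD_GW = 26.0             # continuous load, from the text
PRICE_USD_PER_KWH = 0.05   # average energy price, from the text

# ASSUMPTION: cooling/operational overhead multipliers, picked to match
# the 14 to 17 billion dollar range quoted in the text.
OVERHEAD_LOW, OVERHEAD_HIGH = 1.25, 1.50

# Hardware depreciation, maintenance, and amortized capital
# in billions of USD per year, from the text.
HARDWARE_LOW, HARDWARE_HIGH = 20.0, 30.0

# GW * hours = GWh; divide by 1,000 to get TWh.
energy_twh = LOAD_GW * HOURS_PER_YEAR / 1000.0

# 1 TWh = 1e9 kWh, so TWh * USD/kWh is already in billions of USD.
energy_cost = energy_twh * PRICE_USD_PER_KWH

power_low = energy_cost * OVERHEAD_LOW
power_high = energy_cost * OVERHEAD_HIGH

total_low = power_low + HARDWARE_LOW
total_high = power_high + HARDWARE_HIGH

# A 10% efficiency gain, applied to the bare energy bill (low end)
# and to the highest power-plus-overhead bill (high end).
savings_low = 0.10 * energy_cost
savings_high = 0.10 * power_high

print(f"Annual consumption: {energy_twh:.1f} TWh")
print(f"Energy cost: {energy_cost:.1f} billion USD")
print(f"Power with overhead: {power_low:.1f} to {power_high:.1f} billion USD")
print(f"Total operating cost: {total_low:.1f} to {total_high:.1f} billion USD")
print(f"10% efficiency savings: {savings_low:.1f} to {savings_high:.1f} billion USD")
```

Under these assumptions the totals come out near 34 to 47 billion dollars, consistent with the rounded 35 to 50 billion range, and the efficiency savings fall between one and two billion dollars.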

The fragile foundations of silicon supply

Semiconductor manufacturing remains geographically and technically concentrated. Advanced node production depends on a small number of suppliers of lithography tools, chemical precursors, and rare materials such as gallium, germanium, and tantalum. Export controls or geopolitical disruptions could sharply reduce available capacity.

TSMC’s leading-edge fabs are concentrated in Taiwan, a region exposed to both seismic and geopolitical risk. While expansions are underway in the United States, Japan, and Europe, those new fabs will take several years to reach volume production. The global AI economy therefore operates on a foundation that is powerful yet fragile.

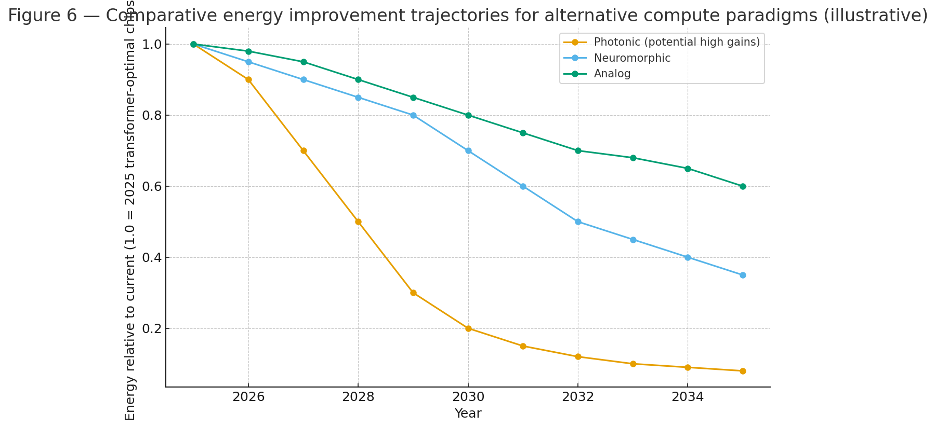

Innovation at the edge of physics

Researchers are exploring photonic, neuromorphic, and analog computing architectures that promise large energy reductions for specific workloads. Laboratory prototypes sometimes demonstrate one or two orders of magnitude better energy efficiency per operation. However, these systems remain far from commercial viability at the scale or reliability demanded by large language models.

Over the next three to five years, the more credible efficiency gains will come from continued advances within the digital domain: model sparsity, quantization, compiler optimization, and specialized ASICs optimized for transformer workloads. The long-term roadmap remains open, but near-term progress will be incremental rather than revolutionary.

The cost of carbon and the price of continuity

Some analysts have speculated that carbon pricing could raise energy costs from five to fifteen cents per kilowatt-hour. That tripling is unlikely under any current policy regime. More realistic scenarios involve increases of one to three cents per kilowatt-hour in markets with carbon trading systems.

Even modest changes in power price have measurable impact on AI infrastructure economics. A 20% increase in electricity cost can reduce project internal rates of return by several points, depending on financing structure and load factor. For operators whose margins already depend on scale efficiency, such shifts can be material.
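To put the carbon-price scenarios in proportion, the sketch below converts a per-kilowatt-hour adder into a percentage price increase and an added annual bill. It reuses the five-cent base price and the 26 gigawatt notional load from the scenario model earlier in this article; the function name `carbon_adder_impact` is hypothetical, and the effect on project returns is deliberately left out because it depends on financing details the article does not specify.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def carbon_adder_impact(load_gw, base_cents_per_kwh, adder_cents_per_kwh):
    """Return (percent price increase, added annual cost in billions of USD).

    Hypothetical helper for illustration: assumes a flat, continuous load
    and a uniform price adder on every kilowatt-hour consumed.
    """
    annual_kwh = load_gw * 1e6 * HOURS_PER_YEAR      # 1 GW = 1e6 kW
    pct_increase = adder_cents_per_kwh / base_cents_per_kwh * 100.0
    added_busd = annual_kwh * adder_cents_per_kwh / 100.0 / 1e9
    return pct_increase, added_busd


# One to three cents: the "more realistic" range in the text.
# Ten cents: the five-to-fifteen-cent tripling the text calls unlikely.
for adder in (1.0, 2.0, 3.0, 10.0):
    pct, added = carbon_adder_impact(26.0, 5.0, adder)
    print(f"+{adder:.0f} cent/kWh: price +{pct:.0f}%, bill +{added:.2f} billion USD/yr")
```

On a five-cent base, a one-cent adder is already a 20% price increase, so the 20% case discussed above corresponds to the low end of the realistic carbon-price range.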

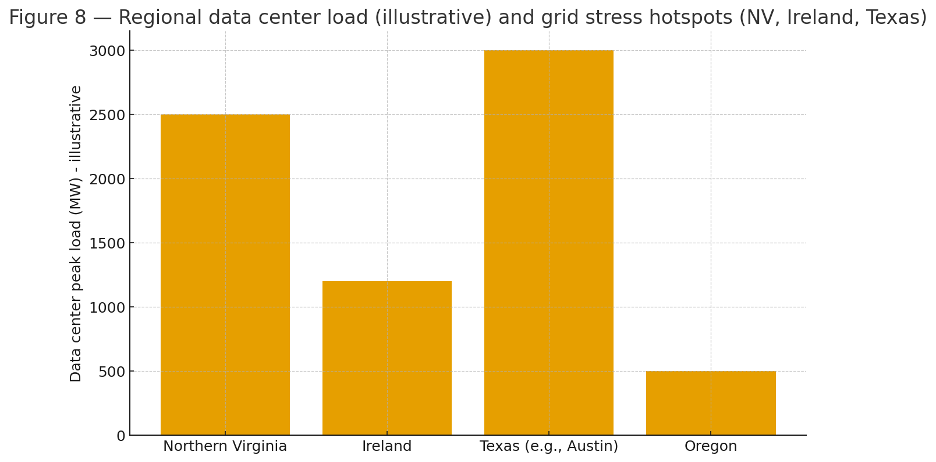

In regions such as Northern Virginia, Ireland, and Singapore, local grids are already showing strain. Data center demand is prompting utilities to adopt new pricing models, curtailment rules, or power allocation frameworks. The constraint is now regional grid resilience rather than global energy abundance.

The capital equation

AI infrastructure projects are highly leveraged. They assume access to long-term, low-cost financing and steady demand growth. A sustained rise in interest rates or capital cost can quickly erode project viability. When the cost of capital rises from 8% to 12%, a project designed for a 10% internal rate of return no longer earns more than its financing costs and can become uneconomic.
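A minimal net-present-value sketch shows why. It assumes a stylized project, not any real deployment: an up-front investment of 100 (arbitrary units) repaid by level annual cash flows over ten years, sized so that the internal rate of return is exactly 10%. The investment size, the ten-year horizon, and the helper names are all assumptions made for illustration.

```python
def level_cash_flow(investment, irr, years):
    """Annual cash flow that gives the project exactly the stated IRR.

    Standard annuity formula: payment = I * r / (1 - (1 + r) ** -n).
    """
    return investment * irr / (1.0 - (1.0 + irr) ** -years)


def npv(rate, investment, annual_cash_flow, years):
    """Net present value of level year-end cash flows at a discount rate."""
    present_value = sum(
        annual_cash_flow / (1.0 + rate) ** t for t in range(1, years + 1)
    )
    return present_value - investment


INVESTMENT = 100.0   # ASSUMPTION: arbitrary units
YEARS = 10           # ASSUMPTION: illustrative project life
DESIGN_IRR = 0.10    # from the text

cash_flow = level_cash_flow(INVESTMENT, DESIGN_IRR, YEARS)

for cost_of_capital in (0.08, 0.10, 0.12):
    value = npv(cost_of_capital, INVESTMENT, cash_flow, YEARS)
    verdict = "creates value" if value > 1e-9 else "does not create value"
    print(f"Cost of capital {cost_of_capital:.0%}: NPV = {value:+.2f} ({verdict})")
```

At an 8% cost of capital the project's net present value is positive, at 10% it is zero by construction, and at 12% it turns negative. The same cash flows that justified the investment under cheap financing destroy value under expensive financing.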

This dynamic favors hyperscalers and sovereign investors that can secure funding at lower rates. Smaller entrants face higher volatility and may struggle to finance sustained deployments. The AI infrastructure race is therefore not only a technology contest but also a capital allocation contest.

Geopolitics and the new industrial order

Semiconductors have become instruments of national power. Export controls on advanced chips and lithography tools have already redrawn supply chains. Governments are competing to attract fabs through subsidy programs such as the U.S. CHIPS Act, the EU Chips Act, and Japan’s semiconductor initiatives.

As nations view compute capacity as a strategic asset, data centers and chip facilities increasingly resemble critical infrastructure. Some jurisdictions are beginning to consider AI compute under utility or national security frameworks. The boundary between technology and industrial policy is dissolving.

The rise of compute as a utility

The next phase of the AI economy will resemble a utility model more than a software market.

- Long-term procurement contracts will replace on-demand cloud scaling.

- Compute location will depend on access to reliable power and cooling, not on proximity to customers.

- Margins will normalize as infrastructure capital costs dominate.

- Vertical integration will accelerate as firms internalize chip design, manufacturing partnerships, and power sourcing.

Competitive advantage will depend on mastering industrial constraints rather than optimizing algorithms. Ownership of energy, silicon capacity, and interconnects will define strategic control.

Adaptation and resilience

The constraint economy is not static. Several adaptive forces are already visible:

- Leading foundries are building new capacity in the United States, Europe, and Japan.

- Hyperscalers are exploring small modular reactors, microgrids, and direct renewable procurement to secure power.

- AI models are becoming more efficient through sparse architectures and quantization.

- Open hardware initiatives and domain-specific accelerators are reducing dependence on any single vendor or software stack.

- Workloads are migrating to energy-rich regions and off-peak periods to exploit lower costs.

Constraints evolve through innovation, policy, and capital coordination. The actors that treat them as dynamic variables rather than fixed barriers will gain the long-term advantage.

The industrialization of intelligence

Artificial intelligence is no longer defined by code alone. It is built on mines, fabs, grids, and capital markets. The companies that master this industrial layer will define the next decade of technology leadership.

The AI era is not just about smarter algorithms. It is about building, financing, and governing the physical systems that make intelligence possible. The true measure of strategic strength is shifting from software velocity to industrial resilience.