Nobody ever said the Ethernet Roadmap was a direct route. When the 25G Ethernet Consortium (Arista, Broadcom, Google, Mellanox Technologies and Microsoft) proposed single-lane 25-Gbps Ethernet and dual-lane 50-Gbps Ethernet in July 2014, it created a big fork in the roadmap. Networks had to choose between sticking with the established progression from 10 GbE to 40 GbE or adopting the 25 GbE to 50 GbE approach. Prior to 2014, networks had built their migration plans around the 10 GbE to 40 GbE technology. The introduction of the 25G lane offered a lower cost per bit and an easy transition to 50G, 100G and beyond.
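
Stated as lane arithmetic, the fork looks like this. The sketch below multiplies a per-lane rate by a lane count. The lane counts are those of common Ethernet PHYs: four 10G lanes for 40 GbE, and one, two or four 25G lanes for 25, 50 and 100 GbE. The function names are illustrative only.

```python
def port_speed(lane_gbps: int, lanes: int) -> int:
    """Aggregate port speed in Gbps for a given lane rate and lane count."""
    return lane_gbps * lanes

def lanes_needed(target_gbps: int, lane_gbps: int) -> int:
    """Smallest number of lanes that reaches target_gbps (ceiling division)."""
    return -(-target_gbps // lane_gbps)

# The 10G-lane branch: 10 GbE on one lane, 40 GbE on four.
ten_g_branch = [port_speed(10, n) for n in (1, 4)]
# The 25G-lane branch: 25, 50 and 100 GbE on one, two and four lanes.
twenty_five_g_branch = [port_speed(25, n) for n in (1, 2, 4)]

print(ten_g_branch)          # [10, 40]
print(twenty_five_g_branch)  # [25, 50, 100]
# Reaching 100G takes ten 10G lanes but only four 25G lanes.
print(lanes_needed(100, 10), lanes_needed(100, 25))  # 10 4
```

Needing fewer lanes for the same port speed is one way to read the lower-cost-per-bit argument above.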

25G/50G lanes create a new challenge

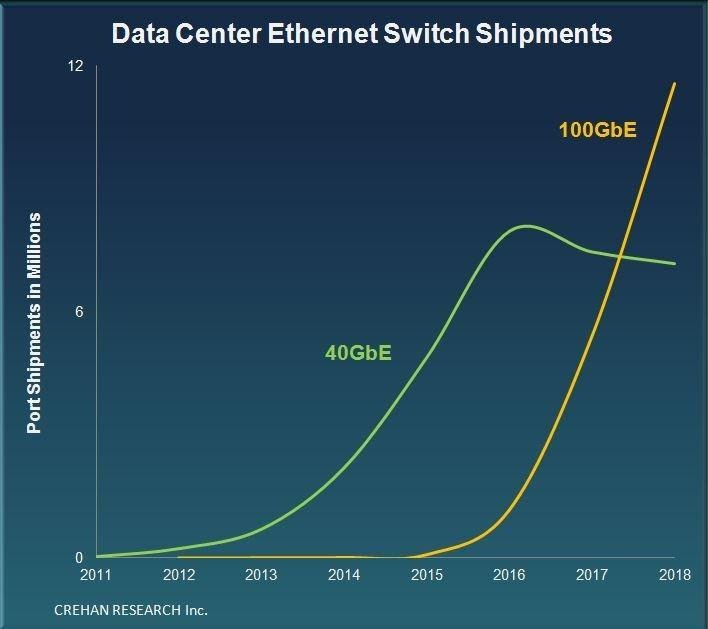

If port sales of the competing technologies are any indication, the industry has spoken. Boosted by the 25G lane, sales of 100 GbE ports are rising, while sales of 40 GbE switches have been declining since 2016. Much of the growth in 25G lane adoption is being fueled by large hyperscale and cloud data centers, where 100 GbE singlemode core-to-core links are standard and provide an easy jump to 400 GbE.

The increase in speeds is also being driven by new merchant-silicon switch ASICs that enable the next generation of switches to support 32 ports of 400 Gbps, built from 50 Gbps lanes.
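
A quick consistency check on these numbers: 32 ports at 400 Gbps should equal the ASIC's lane count times its lane rate. The sketch below uses the figure of 256 lanes of 50G per switch ASIC that this article cites. The function name is illustrative.

```python
def aggregate_gbps(count: int, gbps_each: int) -> int:
    """Total switching bandwidth in Gbps for `count` ports or lanes."""
    return count * gbps_each

front_panel = aggregate_gbps(32, 400)  # 32 ports x 400 Gbps
asic_lanes = aggregate_gbps(256, 50)   # 256 lanes x 50 Gbps

print(front_panel / 1000, asic_lanes / 1000)  # 12.8 12.8 (Tbps)
assert front_panel == asic_lanes
```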

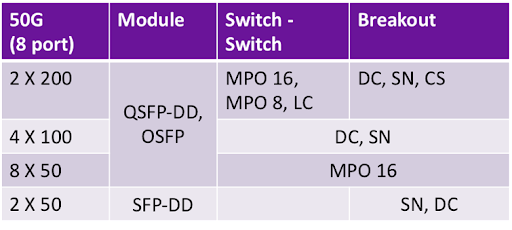

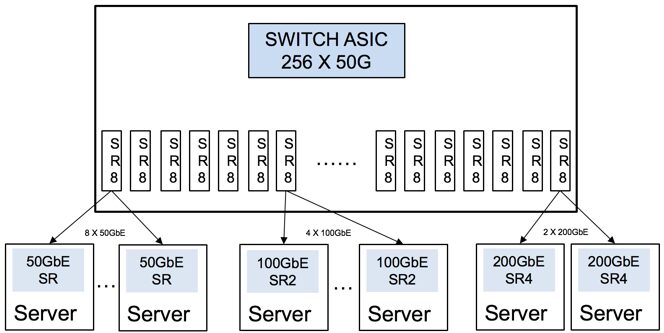

As switch ASIC chips get faster and add more switching capacity, data centers must find the most cost-effective way to distribute the increased capability to the front panel of the switch. The goal is to be able to support 100 GbE, 200 GbE and 400 GbE switch-to-switch links while also allowing these switches to provide the option of connecting more servers.

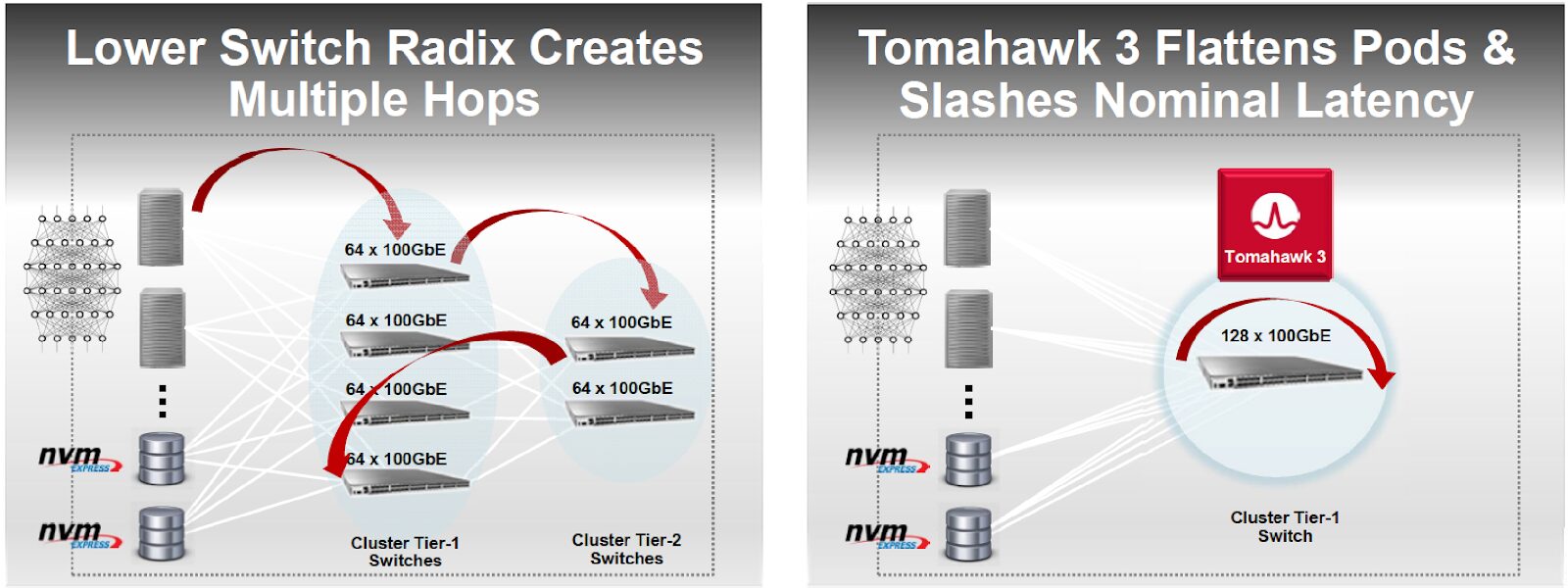

Servers connected through a single switch provide much lower latency, a key driver of application performance.

Source: Broadcom

The value of 16-fiber technology

Until recently, the primary method of connecting access switches and servers within the data center has involved 12- or 24-fiber connectivity, typically delivered using multifiber push-on (MPO) connectors. The introduction of octal technology (eight lanes per switch port) enables data centers to match front-panel ports to the growing number of 50G lanes (currently 256 per switch ASIC).
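
To see why eight lanes per port is a natural fit, the sketch below divides the ASIC's lanes across front-panel ports. It assumes every lane is brought out to the front panel with none reserved. The four-lane (quad) comparison and the helper name are illustrative additions, not from the source.

```python
ASIC_LANES = 256  # 50G lanes per switch ASIC, as cited in the text
LANE_GBPS = 50

def front_panel_ports(asic_lanes: int, lanes_per_port: int) -> int:
    """Ports needed to expose every ASIC lane, assuming an even split."""
    if asic_lanes % lanes_per_port:
        raise ValueError("lanes do not divide evenly into ports")
    return asic_lanes // lanes_per_port

for label, lanes in (("quad", 4), ("octal", 8)):
    ports = front_panel_ports(ASIC_LANES, lanes)
    print(f"{label}: {ports} ports at {lanes * LANE_GBPS}G")
# quad: 64 ports at 200G
# octal: 32 ports at 400G
```

The octal result, 32 ports of 400G, matches the 32-port 1U switch this article describes.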

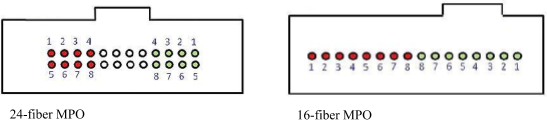

With 32 ports per 1U switch, network managers can provide 400G of bandwidth per port. This is where 16-fiber interfaces offer a distinct advantage over 12-fiber or 24-fiber solutions. A 12-fiber MPO supports a maximum of six duplex lanes, too few for the eight lanes a 400G port needs. A 24-fiber MPO connector supports the required 16 fibers (eight transmit and eight receive) but leaves eight of its 24 positions, a third of the connector, unused. The sweet spot, then, seems to be a 16-fiber MPO connector. Eight transmit and eight receive fibers, each running at 50 Gbps, would support 400 GbE while using 100 percent of the connector's capacity.
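
The connector comparison reduces to counting fibers. In the sketch below, each 50G lane needs one transmit and one receive fiber, so a 400G port needs 16. `mpo_fit` is a hypothetical helper that reports whether a connector can carry all eight lanes and, if it can, how many positions sit idle.

```python
LANES_NEEDED = 8  # 8 x 50G lanes = 400G; each lane uses one Tx and one Rx fiber

def mpo_fit(connector_fibers: int, lanes_needed: int = LANES_NEEDED) -> dict:
    """Report whether one MPO can carry the lanes, and its fiber utilization."""
    max_lanes = connector_fibers // 2  # duplex lanes the connector can hold
    if max_lanes < lanes_needed:
        return {"fits": False, "max_lanes": max_lanes}
    used = 2 * lanes_needed  # fibers actually lit
    return {
        "fits": True,
        "max_lanes": max_lanes,
        "idle_fibers": connector_fibers - used,
        "utilization": used / connector_fibers,
    }

for fibers in (12, 16, 24):
    print(fibers, mpo_fit(fibers))
# 12 {'fits': False, 'max_lanes': 6}
# 16 {'fits': True, 'max_lanes': 8, 'idle_fibers': 0, 'utilization': 1.0}
# 24 {'fits': True, 'max_lanes': 12, 'idle_fibers': 8, 'utilization': 0.6666666666666666}
```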

The value of the 16-fiber approach is the added flexibility it affords. It enables data centers to take a 400G circuit and divide it into manageable chunks using today's predominant 50G lane technology. For example, a 16-fiber connection at the switch can be broken out to support up to eight servers at 50 Gbps or up to four servers at 100 Gbps.
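
The breakout options follow from splitting eight 50G lanes evenly. This sketch enumerates every even split. It assumes the optics support each split, which depends on the transceiver and is not something the text specifies. The 200G and 400G entries are arithmetic consequences rather than configurations named above.

```python
def breakouts(lanes: int = 8, lane_gbps: int = 50) -> list[tuple[int, int]]:
    """(server count, Gbps per server) for each even split of a port's lanes."""
    return [
        (lanes // per_server, per_server * lane_gbps)
        for per_server in range(1, lanes + 1)
        if lanes % per_server == 0
    ]

print(breakouts())  # [(8, 50), (4, 100), (2, 200), (1, 400)]
```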

16-fiber MPO connectors are just beginning to be deployed, with the broad market expected to emerge over the next 12 to 18 months. The 16-fiber MPO is nearly identical to existing 12- and 24-fiber MPOs. The main differences are that the 16-fiber connector's pins are smaller and its body is keyed differently to prevent confusion with 12-fiber or 24-fiber units. 16-fiber connectivity can be delivered either within a 2 x 12-fiber (MPO24) configuration or as a single row of 16 fibers (MPO16). While the single-row MPO16 design simplifies polarity management, 12- and 24-fiber MPOs remain the more popular solutions.

As servers become more powerful (and more power hungry), the number of servers per rack is expected to decrease. Newer 1U switches can connect as many as 192 servers. Placing these switches at the top of each server rack leaves unused (and very expensive) switch ports sitting idle. By using 16-fiber connectivity to break out new 400G switch ports across multiple racks, data centers can consolidate switching equipment in the middle or at the end of the server row and make better use of the available capacity.
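
The article does not show how the 192-server figure is derived. One reading that is consistent with the other numbers here, offered as an assumption rather than the source's method, is a 32-port switch that keeps eight 400G ports for uplinks and breaks the other 24 out to 50G servers. The sketch below computes that split and the idle server-facing lanes left when such a switch serves only one rack. The 16-servers-per-rack value is hypothetical.

```python
PORTS = 32
LANES_PER_PORT = 8  # octal ports
LANE_GBPS = 50
PORT_GBPS = LANES_PER_PORT * LANE_GBPS  # 400

def servers_per_switch(uplink_ports: int) -> int:
    """50G servers reachable when `uplink_ports` are held back (assumed split)."""
    return (PORTS - uplink_ports) * LANES_PER_PORT

def oversubscription(uplink_ports: int) -> float:
    """Server-facing bandwidth divided by uplink bandwidth."""
    down = servers_per_switch(uplink_ports) * LANE_GBPS
    up = uplink_ports * PORT_GBPS
    return down / up

def idle_lanes_single_rack(servers_in_rack: int, uplink_ports: int) -> int:
    """Server-facing lanes left unused if the switch serves only one rack."""
    return servers_per_switch(uplink_ports) - servers_in_rack

print(servers_per_switch(8))          # 192
print(oversubscription(8))            # 3.0
print(idle_lanes_single_rack(16, 8))  # 176 (hypothetical 16-server rack)
```

Under these assumptions, a top-of-rack switch in a 16-server rack would strand most of its server-facing lanes, which is the waste the paragraph describes.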

At the same time, server connection speeds are increasing. In response, data centers are replacing direct-attach copper (DAC) server connections with active optical cables (AOCs), whose fiber-based optics extend the reach of these connections. AOCs, however, use non-standard electronics integrated into the cable assembly. So while an AOC extends the link span, the entire assembly has to be replaced any time speeds increase. A structured cabling approach using 16 fibers makes upgrades easier, because only the transceivers need replacing. This flexible design accommodates server speed upgrades with lower capital equipment costs while maintaining the efficient, low-latency performance today's server networks require.

Adding more tools to the toolkit

The IEEE recently completed the 802.3cm standard for 400G over multimode fiber (set for publication early in 2020), which includes 400G SR8, a 16-fiber interface designed for in-row 50G server connections. Singlemode schemes that take advantage of 16-fiber technology are also being developed, such as two 400G-DR4s in one package, which amounts to eight 100G lanes on eight fiber pairs.
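
Both approaches occupy 16 fibers; what differs is the lane rate. The sketch below assumes parallel optics with one transmit and one receive fiber per lane, 50G lanes for 400G SR8 and 100G lanes for each 400G-DR4. The `parallel_link` helper is illustrative.

```python
def parallel_link(lanes: int, lane_gbps: int) -> dict:
    """Aggregate rate and fiber count for a parallel-optic link (2 fibers per lane)."""
    return {"gbps": lanes * lane_gbps, "fibers": 2 * lanes}

sr8 = parallel_link(lanes=8, lane_gbps=50)        # 400G SR8, multimode
dual_dr4 = parallel_link(lanes=8, lane_gbps=100)  # two 400G-DR4s, singlemode

print(sr8)       # {'gbps': 400, 'fibers': 16}
print(dual_dr4)  # {'gbps': 800, 'fibers': 16}
```

Either way the 16-fiber MPO is fully used; the singlemode option simply doubles the rate per lane.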

With either multimode or singlemode, multiplexing also plays a role in meeting capacity and latency requirements. The IEEE also added a new 400G SR4.2 multimode standard, which is essentially four 100G bidirectional (BiDi) links combined in a single module. When deployed on OM5 multimode, which is optimized for short wavelength division multiplexing (SWDM), this standard supports link spans up to 150 meters, 50 percent longer than the 100 meters it supports over OM4.
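
The SR4.2 figures can be checked the same way. This sketch assumes the 802.3cm layout as we understand it: eight fibers in four pairs, each fiber carrying one 50G lane in each direction on two wavelengths. It also takes the 100-meter OM4 reach as the baseline for the 50 percent comparison. Both assumptions should be confirmed against the standard.

```python
FIBERS = 8  # four fiber pairs
LANE_GBPS = 50
LANES_PER_FIBER_EACH_WAY = 1

def rate_per_direction(fibers: int) -> int:
    """Gbps carried in one direction across `fibers` BiDi fibers."""
    return fibers * LANES_PER_FIBER_EACH_WAY * LANE_GBPS

def reach_gain(new_m: float, baseline_m: float) -> float:
    """Fractional reach improvement over a baseline."""
    return (new_m - baseline_m) / baseline_m

print(rate_per_direction(FIBERS))  # 400: the full 400G module
print(rate_per_direction(2))       # 100: one fiber pair, i.e. one 100G BiDi link
print(reach_gain(150, 100))        # 0.5, i.e. 50 percent
```

Compared with SR8, this delivers 400G on half the fibers (8 versus 16).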

Ultimately, the right solution depends on many factors: budget, location, application requirements and service-level agreements, to name a few. And not everybody is trying to solve the same problems. Technologies like 16-fiber connectivity are another tool in the toolkit to help data center operators get where they need to go as efficiently as possible.