Smartphones are getting smarter using artificial intelligence, which means smartphone-processor vendors must improve their AI platforms. To do so, the latest premium smartphones implement hardware-based AI accelerators, including Apple’s neural engine, Huawei’s neural-processing unit, and Qualcomm’s Artificial Intelligence Engine, which features the Hexagon vector processor among other on-device AI processing and acceleration capabilities. These features are already trickling down to some lower-cost phones; for example, Qualcomm offers the AI Engine in its mid-tier Snapdragon 710, 670, and 660 mobile platforms.

But most older or lower-priced phones don’t have special hardware for AI; these phones must rely on the existing CPU and GPU to perform AI tasks. Thus, developing AI apps that run on a range of different phones can be challenging. Optimizing for performance and battery life is also complicated, because some AI tasks run better on the CPU, whereas others work better on more specialized hardware.

Most AI applications today are powered by deep neural networks. The most popular tools for developing these networks are “frameworks” such as TensorFlow and Caffe/Caffe2, which create a high-level expression of a network that, in theory, runs on any processor. In practice, a processor must have the right driver software to support that framework, and each framework requires a different driver. These drivers are often written for Linux-based servers; to simplify AI development on Android, Google has developed the Neural Networks API (NNAPI), first released in Android Oreo.

Qualcomm offers a suite of software tools to help AI developers target Snapdragon processors. The Qualcomm Neural Processing Engine (NPE) software comprises drivers for TensorFlow, Caffe, and Caffe2 as well as the Open Neural Network Exchange (ONNX) format and NNAPI. Each of these drivers can run on the CPU, the GPU, or the vector processor (if present), giving developers flexibility to optimize their applications.

For example, a simple neural network can run efficiently on the CPU using its built-in Neon acceleration, eliminating the time needed to move data to the other units. For larger networks, however, the GPU is more efficient, due to its powerful floating-point capabilities. The vector processor is the most power-efficient hardware, but networks must be converted to integer format before using this unit. Not all Snapdragon chips have a vector processor, so software must check whether it is available before using it.
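The trade-offs above can be sketched in a few lines of Python. This is only an illustration, not Qualcomm's API: the `Unit` enum, the `choose_unit` rules, and the `quantize_uint8` helper are hypothetical names, and the 8-bit affine scheme shown is one common way to convert floating-point values to integers (the actual tools may use a different scheme).

```python
from enum import Enum


class Unit(Enum):
    CPU = "cpu"
    GPU = "gpu"
    VECTOR = "vector"  # vector processor; absent on some chips


def choose_unit(available, prefer_low_power, large_network):
    """Pick a hardware unit, falling back to the CPU (hypothetical rules)."""
    # Check availability before using the vector processor.
    if prefer_low_power and Unit.VECTOR in available:
        return Unit.VECTOR
    if large_network and Unit.GPU in available:
        return Unit.GPU
    return Unit.CPU


def quantize_uint8(values):
    """Map floats to 8-bit integers using an affine scale and zero point."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(-lo / scale)
    quantized = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the 8-bit representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, 0.0, 0.5, 1.0]
unit = choose_unit({Unit.CPU, Unit.GPU}, prefer_low_power=True, large_network=True)
if unit is Unit.VECTOR:
    weights, scale, zero_point = quantize_uint8(weights)
```

In this usage example the chip has no vector processor, so the function falls back to the GPU and the weights stay in floating point.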

Sophisticated software developers have additional choices. By dividing a neural network into layers, an application can run some layers on the CPU, other layers on the GPU, and yet others on the vector processor. This approach engages the maximum performance of the chip while allowing the developer to choose which layers are best suited to each hardware unit. Qualcomm even offers the Hexagon Neural Network library to allow developers to create their own AI algorithms that run directly on the vector processor.
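A per-layer assignment of this kind might look like the following Python sketch. The `Layer` class, the `assign_units` function, and the rules it applies are hypothetical and chosen only for illustration; they are not taken from Qualcomm's tools.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    kind: str        # e.g. "conv", "dense", "softmax"
    quantized: bool  # True if the weights are already in integer format


def assign_units(layers, has_vector_processor):
    """Return a {layer name: unit} plan using simple illustrative rules."""
    plan = {}
    for layer in layers:
        if layer.kind == "conv" and layer.quantized and has_vector_processor:
            plan[layer.name] = "vector"  # integer layers suit the vector unit
        elif layer.kind in ("conv", "dense"):
            plan[layer.name] = "gpu"     # floating-point heavy layers
        else:
            plan[layer.name] = "cpu"     # small layers stay on the CPU
    return plan


network = [
    Layer("conv1", "conv", quantized=True),
    Layer("fc1", "dense", quantized=False),
    Layer("prob", "softmax", quantized=False),
]
plan = assign_units(network, has_vector_processor=True)
```

A realistic partitioner would also weigh the cost of moving data between units, since each handoff takes time that can offset the gain from faster hardware.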

Smartphones today mainly use AI for only a few tasks, such as face unlock and scene detection. The latter feature helps the camera to automatically adjust the exposure and focus for the situation (for example, a landscape shot versus a photo of two people). The phone vendor, not an app developer, generally creates the software for these features, simplifying their deployment.

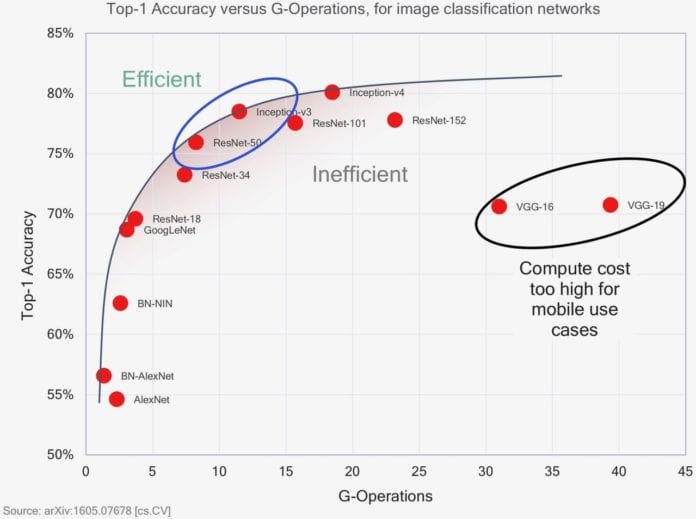

The performance requirements for more sophisticated AI functions escalate rapidly, as the chart shows. Many neural networks are trained for image classification, for example, recognizing types of animals or flowers. Classifying a single image can require billions of arithmetic operations; running this network on a CPU is slow and burns a lot of energy, particularly if the classification is repeated on multiple images or even on a video stream. In contrast, the vector DSP in the Snapdragon 710 can classify more than 30 images per second using the Inception-v3 or ResNet50 networks while minimizing the power drain. Thus, the hardware accelerator plays a crucial role in enabling advanced AI capabilities.
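To see where the billions of operations come from, consider a rough count of multiply-accumulate (MAC) operations. The Python sketch below applies the standard formula for a convolution layer to the first layer of ResNet50 (a 7x7 convolution over 3 input channels that produces a 112x112 output with 64 channels). The per-image total of 4 billion operations is an illustrative assumption, not a figure from Qualcomm.

```python
def conv_macs(out_h, out_w, out_channels, kernel_h, kernel_w, in_channels):
    """Multiply-accumulates for one convolution layer (bias ignored)."""
    return out_h * out_w * out_channels * kernel_h * kernel_w * in_channels


# First convolution layer of ResNet50.
first_layer = conv_macs(112, 112, 64, 7, 7, 3)
print(first_layer)  # 118013952, about 118 million MACs for a single layer

# Assumed total for one image (illustrative), at 30 images per second.
assumed_ops_per_image = 4_000_000_000
images_per_second = 30
required_ops_per_second = assumed_ops_per_image * images_per_second
print(required_ops_per_second)  # 120000000000
```

Under these assumptions, sustaining 30 images per second requires on the order of 100 billion operations per second.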

AI software is also progressing rapidly. The deep neural networks used today have become popular only in the past few years, so data scientists are still finding new ways to optimize their performance. Processor vendors are also working to improve their drivers to better map the changing software to their hardware architectures, which in many cases are also evolving. For example, Qualcomm reports that it has doubled AI performance over the past 12 months simply through software improvements.

So far, few mobile apps use neural networks, but tools such as Qualcomm’s are helping spur developers to improve their products. For example, Tencent has used AI functions to improve the frame rate of its mobile game called High Energy Dance Studio. We see many other use cases for AI on phones, including augmented reality, identifying people and products, and on-device voice assistants. These new applications will drive demand for smartphones with better AI performance.

Linley Gwennap is Principal Analyst at The Linley Group and editor-in-chief of Microprocessor Report. Development of this article is funded by Qualcomm, but all opinions are the author’s.